You can find below the list of past seminars.

14 May 2024, Thibault Clérice (INRIA) & Malametenia Vlachou-Efstathiou (IRHT/IMAGINE-ENPC)

Title: The CATMuS initiative: building large and diverse corpora for handwritten text recognition

Abstract: The CATMuS (Consistent Approaches to Transcribing ManuScripts) initiative is a set of datasets and guidelines meant for training large and generalizing HTR models. In this presentation, we set out to present the issues behind handwritten text recognition of historical documents over a long time and many languages, the choices we faced and how we addressed them. We'll present the resulting dataset for the Middle Ages, the first one to be published out of the CATMuS Initiative, and will present initial results with some models.

2 April 2024, Charlotte Duvette & Paul Kervegan (INHA)

Title: Richelieu. Histoire du quartier, a digital ecosystem for the analysis of the urban experience through historical sources

Abstract: Newspaper illustrations, printed ads, restaurant menus, postcards, but also architectural blueprints and cadastral maps : throughout the 19th century, the urban setting was the ground for numerous representations, comments and documentation, in a context of unprecedented media production. We chose a multifaceted approach to these historical documents. A GIS documents maps evolutions in the plot plan ; OCRised registers offer a glimpse into the neighbourhood’s economic networks ; a 3D model allows us to spatialise representations of the place de la Bourse ; finally, an image-based corpus documents the urban imaginary throughout the XIXth century. By linking these heterogeneous data sources, first through an SQL database, then by developing a Web platform and custom data analysis tools, we aim to gain an understanding of the neighbourhood’s multiple layers : social, historical, economical and cultural.

6 February 2024, Xavier Fresquet (Sorbonne Center for Artificial Intelligence)

Title: Algorithms in the Abbey: Deep Learning and Medieval Music

Abstract: In this presentation, we will explore the impact of deep learning techniques on research at the intersection of musicology and medieval studies. We will commence by analysing the utilization of deep learning for Optical Music Recognition (OMR) within the realms of medieval musicology and computer science. Subsequently, we will investigate its applications in the analysis of medieval images, particularly in the context of musical iconography and organology. Finally, we will delve into the application of these techniques in examining the relationship between text and medieval music, with a specific focus on stylometry applied to medieval secular songs.

Xavier Fresquet serves as the Deputy Director at the Sorbonne Center for Artificial Intelligence (SCAI). Following the completion of his Ph.D. in musicology and digital humanities at Paris-Sorbonne, Xavier Fresquet joined UPMC, later becoming a part of Sorbonne University, in 2015. His research interests revolve around the intersections of musicology, medieval studies, and digital humanities, with a recent focus on machine learning. It encompasses the analysis of images depicting medieval performances, use of theoretical texts related to the Medieval musical world, and the study of musical notation. Xavier Fresquet actively participates in the Musiconis database, the most extensive repository of medieval musical iconography. Additionally, he authors a musicological blog named Mnemomed, devoted to the exploration of the Mediterranean's medieval musical heritage.

9 January 2024, Pedro Ortiz Suarez (Common Crawl Foundation) and Philipp Schneider (Research Assistant at the Chair for Digital History, Humboldt-Universität zu Berlin) ()

Title: [see below]

Abstract:

Pedro Ortiz Suarez : Annotating Multilingual Heterogeneous Web-Based Corpora

In this talk we will introduce the OSCAR project and present our recent efforts in overcoming the difficulties posed by the heterogeneity, noisiness and size of web resources; in order to produce higher quality textual data for as many languages as possible. We will also discuss recent developments in the project, including our data-processing pipelines to annotate and classify large amounts of textual data in constrained environments. Finally, we will present how the OSCAR initiative is currently collaborating with other projects in order to improve data quality and availability for low-resource languages.

Pedro Ortiz Suarez is a Senior Research Scientist at the Common Crawl Foundation. He holds a PhD in computer science and Natural Language Processing from Sorbonne Université. Pedro’s research has mainly focused on how data quality impacts ML models’ performance and how to improve these models through data-driven approaches. Pedro has been a main contributor to multiple open source Large Language Model initiatives such as CamemBERT, BLOOM and OpenGPT-X. Prior to joining the Common Crawl Foundation, Pedro was the founder of the open source project OSCAR, that provides high performance data pipelines to annotate Common Crawl’s data and make it more accessible to NLP and LLM researchers and practitioners. Pedro has also participated to many projects in information extraction and other NLP applications for both the scientific domain and Digital Humanities.

Philipp Schneider : The Digital Heraldry Projekt. A Knowledge Graph with Semantic Web Technologies and Machine Learning to study medieval visual sources

Visual communication forms an important part of medieval and early modern european culture. Especially coats of arms were widely used in different social groups and offer an important source for cultural history. This subject is at the center of the Digital Heraldry Project. Here, we created a Knowledge Graph with Semantic Web Technologies to (1) describe coats of arms, (2) trace their use over different types of historical sources and objects and link them to their images, (3) place these objects in their historical context of use, and (4) trace how and by whom coats of arms were used on these objects. Furthermore, the ontologies created for this Knowledge Graph are able to account for multiperspectivity regarding the description and interpretation of the historical sources it represents. The talk will give an overview on the project and its results with regard to the field of Digital History. Although mainly focusing on the parts of the project, dealing with symbolic AI, the presentation will also touch upon the integration of large image corpora into the Knowledge Graph through Machine Learning.

Philipp Schneider is a research assistant and PhD student in the Digital Heraldry Project, at the Chair for Digital History at Humboldt-Universität zu Berlin. His research focuses on ontology engineering and the application of Knowledge Graphs in historical studies — especially with regard to visual communication in the Middle Ages. Philipp studied history and computer science.

7 November 2023, Charles de Dampierre (Computational Social Science, École Normale Supérieure)

Title: BUNKA: An Exploration Engine for Understanding Large Datasets

Abstract: The increasing volume of digital information presents an unprecedented opportunity for solving numerous complex problems through Collective Intelligence. Yet, existing tools fail to fully harness this potential. To address these challenges, we introduce a new software called Bunka. Bunka is an Exploration Engine designed to enhance our understanding of large datasets. It achieves this by improving the epistemic quality of information through summarization and visualization, making complex data more understandable and insightful. Its core components include mixing Semantic Frames with Topic Modeling through the use of Embeddings & LLMs. We will showcase the practical application of Bunka through real-world case studies, providing a comprehensive examination of an individual's journey toward leveraging Collective Intelligence. Our tool is available at github.com/charlesdedampierre/BunkaTopics.

Bunka is funded by the CNRS, the Ministry of Culture and the PRAIRIE Institute.

3 October 2023, Mathieu Triclot (FEMTO-ST, Université de Technologie de Belfort-Montbéliard)

Title: Analyzing Video Game Player Experiences through Rhythmanalysis

Abstract: In this presentation, I will introduce a method for conducting 'rhythmanalysis' of gaming sessions, involving the collection and visualization of player inputs. This approach leads to intriguing questions about the aesthetics of video games, the interplay between the images and the hand, and the relationships between the video game experience and performing arts, such as music and dance.

7 June 2023, David Bamman (UC-Berkeley)

Title: Measuring Representation in Culture

Abstract: Much work in cultural analytics has examined questions of representation in narrative--whether through the deliberate process of watching movies or reading books and counting the people who appear on screen, or by developing algorithmic measuring devices to do so at scale. In this talk, I'll explore the use of NLP and computer vision to capture the diversity of representation in both contemporary literature and film, along with the challenges and opportunities that arise in this process. This includes not only the legal and policy challenges of working with copyrighted materials, but also in the opportunities that arise for aligning current methods in NLP with the diversity of representation we see in contemporary narrative; toward this end, I'll highlight models of referential gender that align characters in fiction with the pronouns used to describe them (he/she/they/xe/ze/etc.) rather than inferring an unknowable gender identity.

Bio: David Bamman is an associate professor in the School of Information at UC Berkeley, where he works in the areas of natural language processing and cultural analytics, applying NLP and machine learning to empirical questions in the humanities and social sciences. His research focuses on improving the performance of NLP for underserved domains like literature (including LitBank and BookNLP) and exploring the affordances of empirical methods for the study of literature and culture. Before Berkeley, he received his PhD in the School of Computer Science at Carnegie Mellon University and was a senior researcher at the Perseus Project of Tufts University. Bamman's work is supported by the National Endowment for the Humanities, National Science Foundation, the Mellon Foundation and an NSF CAREER award.

9 May 2023, Glenn Roe (CELLF, Sorbonne)

Title: The ModERN Project: Modelling Enlightenment. Reassembling Networks of Modernity through data-driven research

Abstract: The ERC-funded ModERN Project - Modelling Enlightenment. Reassembling Networks of Modernity through data-driven research – is a five-year project whose primary goal is to establish a new ‘data-driven’ literary and intellectual history of the French Enlightenment; one that is both more comprehensive and more systematic in terms of its relationship to the existing digital cultural record, and one that challenges subsequent narratives of European Modernity. To accomplish this, ModERN is deployinga unique combination of cutting-edge computational technologies, a conceptual framework that merges actor-network theory with data-driven discovery, and traditional critical and textual methods, all of which are used to scrutinise the digital archive of the Enlightenment period in France and its aftermath. Specifically, the project employs new techniques for large-scale text analysis and deep neural network language modelling developed in the digital humanities and artificial intelligence communities to identify and analyse conceptual and intertextual networks over an unprecedented collection of 18th- and 19th-century texts. In the context of the DH/AI seminar, the ModERN team will present the initial stages of the project, including corpus construction, large-scale text alignment, noise reduction using BERT and other LLMs, and preliminary network analyses.

4 April 2023, Daniel Foliard with Soumik Mallick, Julien Schuh, Marina Giardinetti, and Mohamed Salim Aissi (EyCon Project)

Title: Early conflict photography as data: an overview of the EyCon project

Abstract: The presentation will provide an overview of a project that aims at aggregating a thematic collection focusing on early conflict photography (1890-1918). The EyCon research project is experimenting with AI techniques to augment historical enquiry and data enrichment of a large visual corpus of historical photographs. It will add automatically enriched metadata to its online database and publish a prototype for the inclusion of AI functionalities into similar repositories. The team also reflects on the ethics of showing and facilitating access to a potentially contested material. The presentation will discuss the project's perimeter, its data architecture and provide case studies of how AI can be applied to late 19th c./early 20th c. photographs.

15 March 2023 (postponed from 7 February), Mélodie Boillet (LITIS, Teklia) et Dominique Stutzmann (IRHT-CNRS)

Title: Texts as images. Collaborating and training models for manuscript studies from layout segmentation to text recognition and to historical analysis

Abstract: For some years now, the analysis of medieval written sources has been greatly changed by the application of digital methods, especially image processing through artificial intelligence or machine learning. Access to texts has been fundamentally expanded by methods such as full-text indexing, automatic text recognition (Handwritten Text Recognition) and text-mining tools such as Named Entity Recognition, stylometry or the identification of textual adoptions (Text Reuse Detection). At the interface between computer vision and digital images of text sources, other fields of research have also developed, such as the analysis of layout and mise-en-page or the exploration of writing as an image from scribe identification to script classification.

In this presentation, we will discuss the methodology and the results of two interdisciplinary research projects funded by national and European institutions: HORAE - Hours: Recognition, Analysis, Edition (ANR-17-CE38-0008) and HOME - History of Medieval Europe (JPI-CH Cultural Heritage). HORAE enquires the production and circulation of books of hours - a complex type of prayer book - in the late Middle Ages and HOME addressed the constitution of registers and cartularies containing copies of legal documents.

We will present how different actors worked together for the study of manuscripts, aligning research on historical questions with technical ones, with partners joining from different disciplines (Humanities, NLP, computer vision and machine learning) and from the public and private sectors. Then, we will present specific models for document object detection (Doc-UFCN) and text recognition (PyLaia) and discuss the next steps in the historical analysis and in further research projects (Socface).

Bio: Mélodie Boillet studied data science, specializing in machine learning and computer vision. She completed her PhD in January 2023, carried out in collaboration between the University of Rouen-Normandy (LITIS) and the company Teklia. Her work focused on deep learning methods to detect objects from document images. They have been developed, among others, within the HORAE and HOME projects, and applied to many other automatic document processing projects.

Dominique Stutzmann studied history at the university Paris 1 - Sorbonne (PhD 2009), the Ecole nationale des Chartes and the EPHE-PSL (habiliation 2021). He is a research professor (directeur de recherche) with the CNRS and honorary professor at the Humboldt University in Berlin. He was the PI of the HOME and HORAE projects. His work focuses on scribal cultures in religious communities of the Middle Ages and the implementation of computer vision for the study of medieval handwritings and texts.

10 January 2023, Thea Sommerschield, ITHACA Project (Ca’ Foscari University of Venice)

Title: Restoring, dating and placing Greek inscriptions with machine learning: the Ithaca project

Abstract: Ithaca is the first deep neural network for the textual restoration, geographical attribution and chronological attribution of ancient Greek inscriptions. This AI model is designed to assist and expand the historian’s workflow, focusing on collaboration, decision support and interpretability. In this presentation, I will introduce Ithaca and guide you through the model's architecture, design decisions and visualisation aids. I will also offer a demo of how to use Ithaca for your personal research.

Bio: Thea Sommerschield is a Marie Skłodowska-Curie fellow at Ca’ Foscari University of Venice. Her research uses machine learning to study the epigraphic cultures of the ancient Mediterranean world. Since obtaining her DPhil in Ancient History (University of Oxford), she has been the Ralegh Radford Rome Awardee at the British School at Rome, Fellow in Hellenic Studies at Harvard’s CHS and Research Innovator at Google Cloud. She co-led the Pythia (2019) and Ithaca (2022) projects, and has worked extensively on Sicilian epigraphy.

6 December 2022, Sofiane Horache (Mines ParisTech) et Katherine Gruel (ENS)

Title: Recognition and Clustering of Ancient Coins

Abstract: Clustering coins according to their die is a problem that has many applications in numismatics. This clustering is crucial for understanding the economic history of tribes (especially for tribes for whom few written records exist, such as the Celts). It is a difficult task, requiring a lot of times and expertises. However, there is very little work that has been done on coin die identification. This presentation will present an automatic tool to know if two patterns have been impressed with the same tool, especially to know if two coins have been struck with the same die. Based on deep learning-based registration algorithms, the proposed method has allowed us to classify a hoard of a thousand Riedone coins dating from the 2nd century BC. This treasure allowed us to build an annotated dataset of 3D acquisitions called Riedones3D. Riedones3D is useful for Celtic coin specialists, but also for the computer vision community to develop new coin die recognition algorithms. Rigorous evaluations on Riedones3D and on other Celtic works show the interest of the proposed method.

8 November 2022, Clotilde BOUST et Claire PACHECO (C2RMF)

Title: Data Production and Analysis at C2RMF

Abstract: Le Centre de recherche et de restauration des musées de France (C2RMF) is a national center inside the Ministry of Culture, devoted to analyse and conserve artworks from French museums. The research department is doing and developing optical and chemical analysis. Several analyses are necessary to understand those complex objects, and can lead to huge volumes of data. We will present the work made by the imaging group and the particle accelerator group, with latest developments on data analysis and questions to solve.

4 October 2022, Jean-Baptiste CAMPS (École nationale des chartes) and Florian CAFIERO (SciencesPo)

Title: Affairs of Styles: Working with AI to Solve Mysterious Attributions

Abstract: Who wrote the Bible ? Did Shakespeare or Molière author their plays ? Who hides behind the pseudonym Elena Ferrante ? And can we identify a unique fingerprint in the writings of an individual, something critics call 'literary style' and linguists 'idiolect' ?

More and more accurate answers to century-old historical controversies or contemporary enigmas are being formulated by specialists of “authorship attribution” and “stylometry”, a domain at the intersection of statistics, artificial intelligence, philology and forensic linguistics. By taking a deep look into the mathematical properties of someone’s language, they can help identify the author of a text, no matter its nature. Lyric or epic poetry, ransom letters, social media posts… : all of these give a large number of clues on their authors, betrayed by their use of hardly noticeable details (function words, suffixes…) and more generally, by complex patterns in their language. In this presentation, we will tell how this discipline grew during the past 150 years, and will draw over some of the cases presented in our recent book (Affaires de style, Paris, 2022) and from our research on stylometry for ancient texts, from the Troubadours to Molière, or more contemporary material, as in our recent article on the authors behind QAnon’s infamous “Q”, with the New York Times.

7 June 2022, Sandra Brée and Thierry Paquet (Le projet POPP)

Title: Le projet POPP : Projet d’Océrisation des recensements de la Population Parisienne

Abstract: Le projet POPP (Projet d’Océrisation des recensements de la Population Parisienne) vise à élaborer une vaste base de données (12 millions d’individus) à partir des recensements nominatifs de Paris de 1926, 1931, 1936 et 1946 qui sont les seuls recensements de la population parisienne existant avant la fin du 20e siècle. Ce projet a deux intérêts principaux : méthodologique et scientifique. Au niveau de l’enrichissement de la connaissance scientifique, l’analyse de ces recensements permettrait de connaître la population parisienne pendant l’Entre-deux-Guerres dans son intégralité. L’analyse quantitative et statistique des populations est rendue particulièrement difficile en histoire en raison du manque de bases de données et l’indexation des recensements de Paris de 1926, 1931, 1941 et 1946, offre l’opportunité d’un pas important dans la connaissance de la population urbaine européenne jusqu’alors très peu étudiée. Sur le plan des sciences du traitement de l’information, ce projet bénéficie des dernières avancées en reconnaissance d’écriture manuscrite en exploitant des modèles sans segmentation (ni des lignes d’écritures ni des mots) pour transcrire automatiquement les données manuscrites tabulaires archivées dans les registres de recensement. Nous présenterons les modèles, la chaine de traitement mis en œuvre et les résultats, ainsi que la méthodologie de travail adoptée entre les deux équipes mobilisées. Ce projet ouvre des perspectives de collaboration fructueuses.

Thierry Paquet (LITIS, Université de Rouen Normandie) research interests concern machine learning, statistical pattern recognition, deep learning, for sequence modelling, with application to document image analysis and handwriting recognition. He contributed to many collaborative projects with academic or industrial partners. He is a member of the governing board of the French Association for Pattern Recognition (AFRIF), and a French representative at the IAPR governing board. He was the president of the French association Research Group on Document Analysis and Written Communication (GRCE) from 2002 to 2010. He was a member of the editorial board of the International Journal of Document Analysis and Recognition, and he is regularly invited in program committees of international conferences such as the International Conference on Frontiers in Handwriting Recognition, the International Conference on Document Analysis and Recognition, the International Conference on Pattern Recognition. From 2004 to 2012 he was in charge of the Master degree Multimedia Information Processing at the University of Rouen Normandie. He was the director of LITIS Laboratory from 2012 to 2019.

Sandra Bree (LARHRA - UMR 5190 CNRS) est historienne démographe et travaille depuis quinze ans sur des données quantitatives historiques pour ses recherches sur le déclin de la fécondité à Paris au XIXe siècle, la transition démographique urbaine ou encore l’évolution de la nuptialité et de la divortialité en France depuis la Révolution. Elle utilise très régulièrement des publications statistiques telles que les Annuaires statistiques de la ville de Paris ou de la France, les recensements de population ou encore les mouvements de la population dont elle recopie les données à la main. Elle a donc pleinement conscience du temps consacré à ce type de recueil et du temps qui serait gagné et pourrait être consacrer à l’analyse si les données étaient déjà disponibles dans des bases de données. Elle est également chargée des médias sociaux de la Société de Démographie Historique et des Annales de Démographie Historique et chargée de l’animation du département « données historiques, économiques et financières » de Progedo. Cette inscription dans le réseau des historiens quantitativistes lui permettra de promouvoir le projet et s’entourer des spécialistes en démographie historique, histoire de la famille, histoire sociale, histoire économique pour analyser la base de données créée et en tirer un colloque puis un ouvrage particulièrement complet et riche sur la population parisienne de l’Entre-deux-guerres.

10 May 2022, Vincent Christlein and Benoit Seguin ()

Title: [see below]

Abstract:

Vincent CHRISTLEIN : Machine Learning for the Analysis of Artworks and Historical Documents

In recent years, a mass digitization of document images and artworks has taken place. In many cases, this makes an exhaustive search and traveling to archives/museums unnecessary but shifts the problem to the online domain. Automatic or semi-automatic methods make it possible to search, process, and analyze this big data in new ways. For example, the historian can get estimates of different document attributes, such as the dominant script type, the date, or the potential writer. These are related to different machine learning tasks: document classification, regression and retrieval. Similarly, in visual artwork analyses, image or object retrieval may relate different artworks to each other. This talk gives an overview over several computational humanities methods developed at the pattern recognition lab focusing on retrieval tasks.

Vincent Christlein studied Computer Science, graduated in 2012 and received his PhD (Dr.-Ing.) in 2018 from the Friedrich-Alexander University of Erlangen-Nürnberg (FAU), Germany. During his studies, he worked on automatic handwriting analysis with focus on writer identification and writer retrieval. Since 2018, he has been working as a research associate at the Pattern Recognition Lab (FAU) and was promoted to Academic Councilor in 2020. He heads the Computer Vision group, which covers a wide variance of topics, e.g. environmental projects such as glacier segmentation or solar cell crack recognition, but also computational humanities topics, such as document and art analysis.

Benoit SEGUIN : Beyond the Proof-of-Concept - DHAI Methods in the Real World

In the space of Digital Humanities, Computational Methods are always used in an applied setting, driven by research questions. However, converting these research results into useful tools for researchers unfortunately rarely happens. By taking a step back on different projects I took part in as an independent engineer (large photo-archives analysis, art market price estimation, visualisation of text-reuse in architecture treatises, ...), I will try to highlight the divide between research interest and end-user interest, and come up with advice on integrating modern ML in DH projects.

Benoit Seguin studied Computer Science at École Polytechnique in Paris. After completing his PhD at EPFL in 2018, where he used Deep Learning to track visual transmissions in large iconographical collections, he has been working as an independent expert on applying Machine Learning to Cultural Heritage data for institutions such as the Getty Research Institue or the ETH Library. Recently, he also has been working on an entrepreneurial project ArtBeat.ai, looking to analyze and organize information about the Art Market.

8 March 2022, Cyran Aouameur (Sony CSL Paris)

Title: Sony CSL Music Team: AI for Artists

Abstract: The music team in Sony CSL Paris investigates how A.I. can be beneficial for artists’ creativity. We research and develop proofs of concept and prototypes aiming at showing that A.I., if well used, can actually benefit artists and creators rather than replacing them. As an introduction to the way music is processed in data-driven approaches such as machine learning, we will start presenting a bit of the story of music representation, highlighting the interplay that exists between the musical content and the way humans formalize music. Then, we will dive into generative modeling for music creation, showcasing some of our latest research work as well as some interface development work. Finally, we will discuss new opportunities offered by AI, especially regarding the human-machine interface.

1 February 2022, Christophe Spaenjers (HEC Paris)

[video]

Title: Biased Auctioneers (with Mathieu Aubry, Roman Kräussl, and Gustavo Manso)

Abstract: We construct a neural network algorithm that generates price predictions for art at auction, relying on both visual and non-visual object characteristics. We find that higher automated valuations relative to auction house pre-sale estimates are associated with substantially higher price-to-estimate ratios and lower buy-in rates, pointing to estimates’ informational inefficiency. The relative contribution of machine learning is higher for artists with less dispersed and lower average prices. Furthermore, we show that auctioneers’ prediction errors are persistent both at the artist and at the auction house level, and hence directly predictable themselves using information on past errors.

1 February 2022, Armin Pournaki (Max Planck Institute for Mathematics in the Sciences)

[video]

Title: Analysing discourse and semantics through geometrical representations

Abstract: I will present some results from my ongoing PhD research, where I use mathematical tools to model discursive and semantic spaces in order to gain insights into the mechanisms behind the construction of meaning. We combine methods from network science and natural language processing for discourse analysis on a corpus from Reddit on the topic of climate change. Activists and climate change deniers each have their own subreddit, which we analyze as distinct discursive spheres that reveal strong differences in terms of organisation and knowledge spreading.

11 January 2022, David Doukhan and Nicolas Hervé (INA – Institut National de l'Audiovisuel)

Title: [see below]

Abstract:

David DOUKHAN : Gender Equality Monitor Project (GEM)

GEM project aims to describe representation and treatment differences existing between women and men in the French-language media such as TV and radio. Automatic and semi-automatic multimodal methods are proposed to extract information from large collections of documents : speech-time, facial exposition time, OCR and automatic transcription analysis. In this presentation, we will present the methods designed for the project, together with the results obtained on large amount of data.

Nicolas HERVÉ : The French Transmedia News Observatory (OTMedia)

OTMedia is a software platform dedicated to research projects that can analyze vast quantities of diverse, multimodal, transmedia data (television, radio, Web, press agencies, Twitter feeds) linked to French and French-language news. The main purpose is to achieve quantitative transdisciplinary research between Human and Social Science and Computer Science. We will present the methodology and results of three different works : Social Media and Newsroom Production Decisions, Covid19 media coverage during the first 6 months, videos of police violence : link between Twitter and television.

7 December 2021, Matteo Valleriani, Jochen Büttner, Noga Shlomi, and Hassan El-Hajj (Max Planck Institute for the History of Science, Berlin)

[video]

Title: The Visual Apparatus of the Sphaera Corpus

Abstract: The Sphaera Corpus comprises 359 editions of early modern textbooks on geocentric cosmology. In our presentation we will focus on the visual apparatus of these books, in particular the scientific illustrations (ca. 20.000) contained therein. We present and discuss the deep learning methods by means of which we have been able to extract the illustrations and to analyze them for similarity. This computational analysis is instrumental in our historical research concerned with the evolution of a specific scientific visual language in the early modern period. We in particular aim to achieve a better understanding of the past uses of scientific illustrations as well as of the different ways in which cosmological content was understood, applied, taught, and socially conceived in the early modern period.

9 November 2021, Mylène Maignant, Tristan Dot, Nicolas Gonthier, Lucence Ing (–)

Title: [see below]

Abstract:

Nicolas Gonthier (Imagine team – LIGM lab, École des Ponts ParisTech): Deep Learning for Art Analysis : Classification and Detection

In this talk, we study the transfer of Convolutional Neural Networks (CNN) trained on natural images to visual recognition in artworks. First, we focus on transfer learning of CNN for artistic image classification. We use feature visualization techniques to highlight some characteristics of the impact of the transfer on the CNN. Another possibility is to transfer a CNN trained for object detection by providing the spatial localization of the objects within images.

Mylène Maignant (Ecole Normale Supérieure – PSL): When Data Science Meets Theatre Studies

Based on a corpus of more than 40 000 English theatre reviews going from 2010 to 2020, this presentation aims to present how Information Technology can help explore London theatre criticism from a digital perspective. Machine learning techniques, sentiment analysis and GIS will be explored to show how two opposite fields can supplement each other.

Lucence Ing (Centre Jean Mabillon, Ecole des chartes – PSL): Words Obsolescence in medieval French : A digital approach on a small corpus

The aim of the PhD research we will present is to find what factors could explain the loss of words from the 13th century to the 15th century in medieval French. This study is based on two witnesses of one text, the prose Lancelot — a famous Arthurian romance composed in the 1230's. After the ocerisation of the witnesses, the text is structured in XML/TEI and enriched with linguistic annotation, produced by a deep-learning model. The obtained lemmas are used to automatically align the texts, which enables to observe similar contexts in both witnesses and thus the evolution of words. Other computational methods are used to try to outline their semantic evolution.

Tristan Dot (Research Engineer, Observatoire de Paris – PSL): Automatic table transcription in manuscripts

Using recent Deep Learning methods, combining image segmentation and Handwritten Text Recognition, it is possible to automatically transcribe whole tables from historical documents - i.e. to recognize the tables’ structure, and to transcribe the content of their cells. We will present such a table transcription method, and discuss its applications in the particular case of medieval astronomical tables analysis.

12 October 2021, Léa Saint-Raymond (ENS-PSL), Thierry Poibeau (ENS-PSL), Mathieu Aubry (École de Ponts ParisTech), Matthieu Husson (Observatoire de Paris-PSL) (DHAI)

Title: Artificial Intelligence and digital humanities encounters around history of arts, history of sciences and language analysis

Abstract: Introductory and methodological session on the themes of the seminars.

June 8, 2021, Pablo Gervas (Universidad Complutense de Madrid)

Title: Embedded Stories and Narrative Levels: a challenge for Computational Narrative

Abstract: Stories told by a character within a story are known as embedded stories. They occur frequently in narrative and they constitute an important challenge to models of narrative interpretation. Computational procedures for interpreting a story need to account for these embedded stories in terms of how to represent them and how to process them in the context of the story acting as frame for them. The talk will explain the concept of embedded stories over some real examples of narrative, describe the challenges that their computational treatment poses, and describe a simplified computational model for the task. The proposed model is capable of representing discourses for embedded stories and interpret them onto a representation that captures their recursive structure. Some examples of application of the model to examples of stories from different domains will be presented, and some preliminary conclusions will be outlined on what embedding implies in terms of computational interpretation of narrative, and the challenges it poses for the ever-growing research efforts for the automated processing of narrative.

May 11, 2021, Benjamin Azoulay (ENS Paris-Saclay)

[Slides]

Title: Gallicagram, un nouvel outil de lexicographie : le big data sans ses gros sabots ?

Abstract: Avec son outil Ngram Viewer, l'entreprise Google pensait en 2010 fonder une nouvelle science : les culturomics. Onze ans plus tard, force est de constater que malgré les ambitieuses promesses du logiciel, son utilisation par les chercheurs reste rare. Les griefs contre Ngram Viewer sont nombreux : corpus opaque, inaccessible et difficile à maîtriser, qualité médiocre des métadonnées, etc. [Gallicagram](https://shiny.ens-paris-saclay.fr/app/gallicagram) est un outil open-source, conçu pour répondre à ces enjeux essentiels d’interprétation en tirant le meilleur profit du libre accès aux données de Gallica. Il permet une maîtrise efficace des corpus étudiés grâce à leur délimitation, en amont des traitements effectués comme à leur description, en aval, et propose différents modes d'analyse complémentaires permettant de tester rapidement une hypothèse. L'analyse du corpus de presse introduit aussi de nouvelles possibilités en histoire politique et en histoire culturelle : il permet ainsi aux chercheurs de s'approcher au plus près des évènements tout en conservant une vision macroscopique. Cette discussion visera à présenter cet outil, à décrire son fonctionnement et à en illustrer les nombreux usages possibles par plusieurs exemples concrets.

May 11, 2021, Benoît de Courson (ENS PSL)

Title: Outillage de présentation des travaux en humanités numériques : pour une approche non-métaphorique des interfaces graphiques

Abstract: L’intervention d’u2p050 portera une réflexion autour des modes de présentation en visioconférence des résultats issus des humanités numériques. Chaque voix, chaque propos est distinct et doit pouvoir bénéficier d’un outillage spécifique, comme une pensée est retranscrite avec des mots qui lui sont propres. Lors de la dernière séance du séminaire DHAI, nous avons collaboré avec l’archéologue Christophe Tuffery afin de produire une interface spécifique à son objet d’étude : l’impact du numérique dans la construction du savoir archéologique.

L’objectif de notre intervention sera d’envisager les possibilités d’une mise en exergue de la contingence qui préside à l’utilisation de tel ou tel des outils classiques de la conférence en montrant la neutralité factice que ces médias présupposent, et la nécessité d’une production singulière d’interface dans le régime de réinterprétation continue des données propres au numérique.

u2p050 proposera dans un second temps une réflexion sur le statut métaphorique qu’entretiennent les interfaces graphiques face à la littéralité supposée des interfaces textuelles (CLI) : gage de scientificité. Finalement, à partir de cette analyse entre régime métaphorique/régime littéral des interfaces, u2p050 envisagera une problématisation de l’intelligence artificielle à partir de sa scène primitive : l’article de Turing Computing Machinery and Intelligence.

u2p050 est une machine-entreprise de production audio(s)/visuelle(s) philosophique qui explore les relations entre les pensées conceptuelles et les matériaux numériques qui les enregistrent, les transforment et les diffusent. Actuellement hébergée à la Gaité Lyrique, ses expérimentations s’inscrivent à la croisée du cinéma, de l’art, de la technologie et de la philosophie.

April 13, 2021, Christophe Tuffery and Grazia Nicosia (Inrap, EUR Paris Seine Université Humanités, musée du Louvre)

Title: Patrimoine et humanités numériques. Regards croisés entre archéologie et conservation-restauration des biens culturels

Abstract: L'impact croissant des technologies numériques sur les données patrimoniales concerne aussi bien celles issues de fouilles archéologiques que celles résultant d’un suivi de l'état des œuvres dans un contexte muséal. Sur la base de recherches en cours, Christophe Tufféry et Grazia Nicosia, deux professionnels du patrimoine, évoqueront plusieurs aspects de l'incidence du numérique sur les différentes étapes du travail des archéologues, ainsi que sur celui de la documentation et de la conservation matérielle du patrimoine. Ce dialogue abordera, sous les angles scientifiques et opérationnels, les conditions de production et d'utilisation des données relatives au patrimoine archéologique et muséal.

Christophe Tufféry (Inrap) et Grazia Nicosia (Musée du Louvre) sont tous les deux actuellement doctorants à CY Cergy Paris-Université dans le cadre de l'EUR « Humanités, Création et Patrimoine », en partenariat avec l'Institut national du patrimoine.

Christophe Tufféry est géographe et archéologue. Depuis 2010, il est chargé des techniques et méthodes de relevé et d’enregistrement à la Direction Scientifique et Technique à l'Institut national de recherches archéologiques et préventives (Inrap), opérateur public national en archéologie préventive, sous la tutelle du Ministère de la Culture et du Ministère de la Recherche. Ses thèmes de recherche portent sur les méthodes et sur les techniques des relevés archéologiques et topographiques sur le terrain. Depuis 2019, il est en thèse à CY Cergy Paris Université dans le cadre de l'EUR Humanités, création et patrimoine, en partenariat avec l'Institut national du patrimoine. A partir de sa propre expérience comme archéologue et des observations qu'il a faites et qu'il continue à faire sur les opérations archéologiques de l'Inrap et d'autres partenaires scientifiques, il développe une réflexion de nature historiographique et épistémologique sur les modalités des descriptions, des notations, de l'enregistrement, et sur leur évolution sous l'influence de l'informatique depuis les années 1970 et 1980.

Après avoir suivi un double cursus en muséologie et en conservation restauration, Grazia Nicosia s’est spécialisée en conservation préventive. Elle a longtemps exercé en tant que professionnelle libérale, avant de rejoindre, en 2015, le service de la conservation préventive du Musée du Louvre, où elle est plus particulièrement chargée de gérer le marché de suivi de l’état de conservation et d’entretien des collections permanentes et des décors historiques, dans le cadre d’opération programmée en collaboration avec les départements. Actuellement doctorante à l'EUR Humanités, création et patrimoine de Paris Seine, elle conduit une recherche sur le diagnostic des biens culturels en conservation-restauration à l’ère des humanités numériques. Cette recherche vise à raffiner les ontologies du domaine, en structurant les données issues des constats d’état diachroniques réalisés dans le cadre de campagnes de suivi annuel. Son travail réinterroge quelques concepts fondamentaux de la conservation-restauration, comme ceux d’altération et d’état de référence, ainsi que les notions de changement de typologie et d’échelle.

Le collectif u2p050, actuellement hébergé à la Gaîté Lyrique, proposera un mode de présentation original et alternatif des résultats de Christophe Tufféry.

March 16, 2021, Stavros Lazaris, Alexandre Guilbaud, Tom Monnier et Mathieu Aubry (--)

[video]

Title: [voir ci dessous]

Abstract: Stavros Lazaris (CNRS, UMR Orient & Méditerranée): Voir, c’est savoir. Nous vivons entourés d’images. Elles nous portent, nous charment ou nous déçoivent et cela était également le cas, à des degrés différents bien entendu, pour l’homme médiéval. Comment les images ont-elles façonné sa pensée ? Quelle était leur nature et comment l’homme médiéval pouvait les utiliser ? La période médiévale est particulièrement propice pour mener une recherche sur la constitution d’une pensée visuelle liée aux savoirs scientifiques grâce à l'apport de l'IA. Cette présentation sera l'occasion de passer en revue un projet de recherche en Humanités numériques basé sur la reconnaissance de formes et les solutions que peut apporter l'IA dans les recherches actuelles en représentations visuelles médiévales.

Alexandre Guilbaud (Sorbonne Université, Institut de mathématiques de Jussieu - Paris Rive Gauche, Institut des sciences du calcul et des données): L’IA et la manufacture des planches au XVIIIe siècle. Nombre de dictionnaires, d’encyclopédies et de traités de la période moderne renferment des planches illustrant les textes avec plus ou moins d’autonomie selon les cas. Ces planches sont parfois – et même souvent, dans les dictionnaires et encyclopédies du XVIIIe siècle – le fruit d’emprunts partiels et d’opérations de recomposition à partir de sources antérieures possiblement diverses. Identifier ces sources puis reconstituer à partir d’elles le mode de fabrication de ces planches (ce que l’on appelle leur manufacture) permet de caractériser les opérations de mise à jour et d’adaptation des contenus et modalités de représentations iconographiques effectuées par leurs auteurs, puis de contribuer à caractériser, à plus large échelle, certains processus de circulation des savoirs au travers de l’image dans ce type de corpus. Nous donnerons des exemples concrets de cette problématique de recherche actuelle et des nouvelles potentialités ouvertes par l’IA sur l’exemple de l’Encyclopédie de Diderot et de D’Alembert (1751-1772) et de l’édition critique actuellement conduite dans le cadre de l’ENCCRE.

Tom Monnier et Mathieu Aubry (Imagine, LIGM, École des Ponts ParisTech): Extraction et mise en correspondances automatique d'images. Nous présenterons les principaux défis qu'il faudrait surmonter pour pouvoir identifier de manière complètement automatique les reprises d'image. Le premier de ces défis que nous détaillerons est l'extraction automatique d'image dans des documents historiques. Nous discuterons ensuite les problèmes liés à la mise en correspondance entre les images extraites.

February 16, 2021, Marc Smith, Oumayma Bounou (École nationale des chartes)

Title: Filigranes pour tous: Historical watermarks recognition

Abstract: We developed a web application to identify a watermark from a simple smartphone photograph by matching it to a corresponding watermark design from a database with more than 16000 designs. After describing the deep learning based recognition method that was built upon the approach of Shen et al, we will present our web application which not only can be used for watermark recognition, but can also serve as a crowdsourcing platform aiming to be enriched by its users.

Bibliography:

- Xi Shen, Ilaria Pastrolin, Oumayma Bounou, Spyros Gidaris, Marc Smith, Olivier Poncet, Mathieu Aubry, Large-Scale Historical Watermark Recognition: dataset and a new consistency-based approach, ICPR 2020

- Oumayma Bounou, Tom Monnier, Ilaria Pastrolin, Xi Shen, Christine Bénévent, Marie-Françoise Limon-Bonnet, François Bougard, Mathieu Aubry, Marc Smith, Olivier Poncet, Pierre-Guillaume Raverdy, A Web Application for Watermark Recognition, JDMDH 2020

Links:

- Humanity/Filigranes pour tous

- Computer Vision

- Web application

January 19th, 2021, Daniel Stoekl (École Pratique des Hautes Études)

Title: De la transcription automatique de manuscrits hébreux médiévaux via l'édition scientifique à l'analyse de l'intertextualité : outils et praxis autour d'eScriptorium

Abstract: Following a brief introduction to our open-source HTR infrastructure eScriptorium cum kraken I will demonstrate its application to the automatic layout segmentation, handwritten textsegmentation and paleography of Hebrew manuscripts. Using its rich (but still growing) internal functionalities and API as well as a number of external tools (Decker et alii 2011, Shmidman et alii 2018 and my own), I will deal with automatic text identification, alignment and crowdsourcing (Kuflik et al 2019, Wecker et al 2019) and how these procedures can be used to create different types of generic models for segmentation and transcription. I will show first ideas for automatically passing from a document hierarchy resulting from HTR to a text oriented model with integrated interlinear and marginal additions that can be displayed in tools like TEI-Publisher. While the methods presented are generic and applicable to most languages and scripts, special attention will be given to problems evolving from dealing with non-Latin scripts, RTL and morphologically rich languages.

Bibliography:

- Dekker, R. H., Middell, G.: Computer-Supported Collation with CollateX: Managing Textual Variance in an Environment with Varying Requirements. Supporting Digital Humanities 2011. University of Copenhagen, Denmark (2011).

- Kuflik, T. M. Lavee, A. Ohali, V. Raziel-Kretzmer, U. Schor, A. Wecker, E. Lolli, P. Signoret, D. Stökl Ben Ezra (2019) 'Tikkoun Sofrim – Combining HTR and Crowdsourcing for Automated Transcription of Hebrew Medieval Manuscripts', DH2019.

- Lapin, Hayim and Daniel Stökl Ben Ezra, eRabbinica

- Meier, Wolfgang, Magdalena Turska, TEI Processing Model Toolbox: Power To The Editor. DH 2016: 936

- Meier, Wolfgang, Turska, Magdalena, TEI-Publisher.

- Shmidman, A., Koppel, M., Porat, E.: Identification of parallel passages across a large hebrew/aramaic corpus. Journal of Data Mining and Digital Humanities, 2018

- Wecker, A. V. Raziel-Kretzmer, U. Schor, T. Kuflik, A. Ohali, D. Elovits, M. Lavee, P. Stevenson, D. Stökl Ben Ezra, (2019) 'Tikkoun Sofrim: A WebApp for Personalization and Adaptation of Crowdsourcing Transcriptions', UMAP’19 Adjunct (Larnaca. New York: ACM Press)

December 15th, 2020, Pierre-Carl Langlais (Paris-IV Sorbonne)

[video]

Title: Redefining the cultural history of newspapers with artificial intelligence: the experiments of the Numapresse project

Abstract: During the last twenty years, libraries developed massive digitization program. While this shift has significantly enhanced the accessibility cultural digital archives, it has also opened up unprecedented research opportunities. Innovative projects have recently attempted to apply large scale quantitative methods borrowed from computer science to tackle ambitious historical issues. The Numapresse project proposes a new cultural history of French newspaper from 1800, notably through the distant reading of detailed digitization outputs from the French National Library and other partners. It has recently become a pilot project of the future data labs of the French National Library. This presentation features a series of 'operationalization' of core concepts of the cultural history of the news in the context of a continuous methodological dialog with statistics, data science, and machine learning. Classic methods of text mining have been supplemented with spatial analysis of pages to deal with the complex and polyphonic editorial structures of newspapers in order to retrieve specific formats like signatures or news dispatch. The project has created a library of 'genre models' which made it possible to retrieve large collections of texts belong to leading newspaper genres in different historical settings. This approach has been extended to large collections of newspaper images through the retraining of deep learning models. The automated identification of text and image reprints also makes it possible to map the transforming ecosystem of French networks and its connection to other publication formats. The experimental work of Numapresse aims to foster a modeling ecosystem among research and library communities working on cultural heritage archives.

November 24, 2020, Philippe Gambette (Université Paris-Est Marne-la-Vallée)

[video]

Title: Alignment and text comparison for digital humanities

Abstract: This talk will provide several algorithmic approaches based on alignment or text comparison algorithms, at different scales, with applications in digital humanities. We will present an alignment-based approach for 16th and 17th century French text modernisation and show the impact of this normalisation process on automatic geographical named entity recognition.

We will also show several visualisation techniques which are useful to explore text corpora by highlighting similarities and differences between those texts at different levels. In particular, we will illustrate the use of Sankey diagrams at different levels to align various editions of the same text, such as poetry books by Marceline Desbordes-Valmore published from 1819 to 1830 or Heptameron by Marguerite de Navarre. This visualisation tool can also be used to contrast the most frequent words of two comparable corpora to highlight their differences. We will also illustrate how the use of word trees, built with the TreeCloud software, helps identifying trends in a corpus, by comparing the trees built for subsets of the corpus.

We will finally focus on stemmatology, where the analysed texts are supposed to be derived from a unique initial manuscript. We will describe a tree reconstruction algorithm designed to take linguistic input into account when building a tree describing the history of the manuscripts, as well as a list of observed variants supporting its edges.

Contributors of these works include Delphine Amstutz, Jean-Charles Bontemps, Aleksandra Chaschina, Hilde Eggermont, Raphaël Gaudy, Eleni Kogkitsidou, Gregory Kucherov, Tita Kyriacopoulou, Nadège Lechevrel, Xavier Le Roux, Claude Martineau, William Martinez, Anna-Livia Morand, Jonathan Poinhos, Caroline Trotot and Jean Véronis.

October 20, 2020, The DHAI team (DHAI)

[video]

Title: Heads and Tails: When Digital Humanities and Artificial Intelligence Meet.

Abstract: Joint presentation of the DHAI team, which serves as an introduction to this second season of DHAI.

October 9, 2020, Karine Gentelet (Université du Québec en Outaouais (Canada))

[video]

Title: Reflections on the decolonization processes and data sovereignty based on the digital and AI strategies of indigenous peoples in Canada

Abstract: This presentation will focus on the digital strategies developed by Indigenous Peoples to reaffirm their information sovereignty and how they contribute to the decolonization of data. The information that represents Indigenous Peoples is tainted by colonization and systemic practices of informational discrimination. Their initiatives of informational sovereignty and data decolonization allows data that is collected by and for them and therefore much more accurate, diversified and representative of their realities and needs. The principles developed by Indigenous Peoples not only testify to an asserted digital agency but also induce a paradigmatical shift due to the inclusion of ancestral knowledge and traditional modes of governance. It allows a new power balance within the digital ecosystem. (see below for the French version of this abstract)

October 9, 2020, Karine Gentelet (Université du Québec en Outaouais (Canada))

[video]

Title: Réflexions sur les processus de décolonisation et souveraineté des données à partir des stratégies numériques et d’IA des Peuples autochtones au Canada

Abstract: Cette présentation portera sur les stratégies numériques développées par les Peuples autochtones pour réaffirmer leur souveraineté en matière d'information et sur la manière dont ils contribuent à la décolonisation des données. Les informations qui représentent les Peuples autochtones sont biaisées du fait de la colonisation et des pratiques systémiques de discrimination informationnelle. Leurs initiatives de souveraineté informationnelle et de décolonisation des données permettent de recueillir des données par et pour eux et donc beaucoup plus précises, diversifiées et représentatives de leurs réalités et de leurs besoins. Les principes développés par les Peuples autochtones témoignent non seulement d'une agence numérique affirmée, mais induisent également un changement de paradigme dû à l'inclusion des connaissances ancestrales et des modes de gouvernance traditionnels. Ils permettent un nouvel équilibre des pouvoirs au sein de l'écosystème numérique.

June 8, 2020, Antonio Casilli (Paris School of Telecommunications (Telecom Paris))

Title: The last mile of inequality: What COVID-19 is doing to labor and automation

Abstract: The ongoing COVID-19 crisis, with it lockdowns, mass unemployment, and increased health risks, has been described as a automation-forcing event, poised to accelerate the introduction of automated processes replacing human workers. Nevertheless, a growing body of literature has emphasized the human contribution to machine learning. Especially platform-based digital labor performed by global crowds of underpaid micro-workers or extracting data from cab-hailing drivers and bike couriers, turns out to play an crucial role. Although the pandemic has been regarded as the triumph of 'smart work', telecommuting during periods of lockdown and closures concern only about 25 percents of workers. A class gradient seems to be at play, as platform-assisted telework is common among higher-income brackets, while people on lower rungs of the income ladder are more likely to hold jobs that involve physical proximity, which are deemed essential and cannot be moved online or interrupted. These include two groups of contingent workers performing what can be described as 'the last mile of logistics' (delivery, driving, maintenance and other gigs at the end of the supply chain) and the 'last mile of automation' (human-in-the-loop tasks such as data preparation, content moderation and algorithm verification). Indeed during lockdown, both logistic and micro-work platforms have reported a rise in activity – with millions signing up to be couriers, drivers, moderators, data trainers. The COVID-19 pandemic has thus given unprecedented visibility to these workers, but without increased social security. Their activities are equally carried out in public spaces, in offices, or from home—yet they generally expose workers to higher health risks with poor pay, no insurance, and no sick leave. Last mile platform workers shoulder a disproportionate share of the risk associated with ensuring economic continuity. Emerging scenarios include use of industrial actions to increase recognition and improve their working conditions. COVID-19 has opened spaces of visibility by organizing workers across Europe, South America, and the US. Since March 2020, Instacart walkouts, Glovo and Deliveroo street rallies, Amazon 'virtual walkouts' have started demanding health measures or protesting remuneration cuts.

June 4, 2020, Sietske Fransen & Leonardo Impett (Max-Planck-Institut für Kunstgeschichte)

Title: Print, Code, Data: New Media Disruptions and Scientific Visualization

Abstract: This paper discusses changes in scientific diagramming in response to new media disruptions: the printing press, and online data/research code. In the first case, the role of handwritten documents and the visual forms of scientific diagramming re-align in response to the circulational economics and medial accessibility of the printing press in early modern Europe. In the second, published research code unsettles the principle, common in the second half of the twentieth century, that a peer-reviewed article in computer science ought to outline its methods with enough detail to enable repeatability.

The printing press brought benefits as well as restrictions to the inclusion of diagrams in scientific works. Some of the downsides were that not every printer was able to manufacture separate wood blocks and/or copper plates that could contain the diagram as if hand-drawn. Instead, diagrams were often made entirely out of typeface. On the other hand, the quick spread of the use of printed books in addition to manuscripts, opened new roles for the manuscript as a medium of creativity. In the early days of print, it is therefore in manuscripts that we can find the visualization of scientific processes, which form the background to printed material.

The information sufficient for 'replicability' in computer science (which in the physical sciences has meant 'formal experimental methodology', but in computer science is epistemically closer to the research itself) had most often been included in tables, schematizations and heavily-labelled diagrams, sometimes augmented by so-called 'pseudocode' (a kind of software caricature, which cannot itself be run on a machine). The inclusion of research code thus dramatically displaces the role of scientific diagrams in machine learning research: from a notational system which ideally contains sufficient information to reproduce an algorithm (akin to electrical circuit diagrams) to a didactic visualization technique (as in schoolbook diagrams of the Carbon Cycle). In Badiou's (1968) terminology, diagrams shift from symbolic formal systems to synthetic spatializations of non-spatial processes. The relationship between 'research output' (as the commodity produced by computer-science research groups) and its constituent components (text, diagram, code, data) is further destabilized by deep learning techniques (which rely on vast amounts of training data) : no longer are algorithms published on their own, but rather trained models, assemblages of both data and software, again shifting the onus of reproducibility (and, therefore, the function of scientific notation). The changed epistemological role of neural network visualizations allows for a far greater formal instability, leading to the rich ecology of visual solutions (Alexnet, VGG, DeepFace) to the problem of notating multidimensional neural network architectures.

By comparing the impact of new media on the use, form and distribution of diagrams in the early modern period, with the impact of code on the role of diagrams in computer science publications, we are opening up a conversation about the influence of new media on science, both in history and in current practice.

March 30, 2020, Aaron Hershkowitz (Institute for Advanced Study)

Title: The Cutting Edge of Epigraphy: Applying AI to the Identification of Stonecutters

Abstract: Inscriptions are a vital category of evidence about the ancient world, providing a wealth of information about subject matters and geographical regions outside of the scope of surviving literary texts. However, to be most useful inscriptions need to be situated within a chronological context: the more precise the better. This kind of chronological information can sometimes be gleaned from dating formulae or events mentioned in the inscribed texts, but very often no such guideposts survive. In these cases, epigraphers can attempt to date a given text on a comparative basis with other, firmly-dated inscriptions. This comparative dating can be done on the basis of socio-linguistic patterns or the physical shape of letter forms present in the inscription. In the latter case, a very general date can be achieved on the basis of the changing popularity of particular letter forms and shapes in a particular geographic context, or a more specific date can be achieved if the 'handwriting' of a stonecutter can be identified. Such a stonecutter would have a delimited length of activity, so that if any of his inscriptions have a firm date, a range of about thirty years or less can be provided to all other inscriptions made by him. Unfortunately, very few scholars have specialized in the ability to detect stonecutter handwriting, but as was showed by an early attempt (see Panagopoulos, Papaodysseus, Rousopoulos, Dafi, and Tracy 2009, Automatic Writer Identification of Ancient Greek Inscriptions) computer vision analysis has significant promise in this area. The Krateros Project to digitize the epigraphic squeezes of the Institute for Advanced Study is actively working to pursue this line of inquiry, recognizing it as critical for the future of epigraphy generally.

March 2, 2020, Matteo Valleriani (Technische Universität, Berlin)

Title: The Sphere. Knowledge System Evolution and the Shared Scientific Identity of Europe

Abstract: On the basis of the corpus of all early modern printed editions of commentaries on the Sphere of Sacrobosco, the lecture shows how to reconstruct the transformation process—and its mechanisms—undergone by the treatise, and so to explore the evolutionary path, between the fifteenth and the seventeenth centuries, of the scientific system pivoted around cosmological knowledge: the shared scientific identity of Europe. The sources are analyzed on three levels: text, images, and tables. From a methodological point of view the lecture will also show how data are extracted by means of machine learning and analyzed by means of an approach derived from the physics of the complex systems and network theory.

February 3, 2020, Emmanuelle Bermès and Jean-Philippe Moreux (BnF)

Title: From experimentation to community building: AI at the BnF

Abstract: Artificial intelligence has been present at the BnF for more than 10 years, at least in its 'machine learning' version, through R&D projects conducted with the image and document analysis community. But we can imagine that the rise and fall of expert systems at the beginning of the 1990s will also have questioned the BnF, as our American colleagues did: 'Artificial Intelligence and Expert Systems: Will They Change the Library?' (Linda C. Smith, F. W. Lancaster, University of Illinois, 1992).

Today, the democratization of deep learning promotes the ability to experiment and carry out in virtual autonomy, but also and above all makes possible interdisciplinary projects where expertise on content, data and processing is required. This conference will be an opportunity to present the results of such a project, dedicated to the visual indexing of Gallica's iconographic content, to share our feedback and to consider a common dynamic driven by the needs and achievements of the field of digital humanities practice.

The presentation will place these experiments in the BnF's overall strategy for services to the researchers, but will also broaden the scope by addressing the overall positioning of libraries with regard to AI.

January 6, 2020, Jean-Baptiste Camps (ENC)

Title: Philology, old texts and machine learning

Abstract: Phrase de présentation: I will give an introduction to machine learning techniques applied to old documents (manuscripts) and texts, ranging from text acquisition (e.g. handwritten text recognition) to computational data analysis (e.g. authorship attribution).

January 6, 2020, Alexandre Guilbaud and Stavros Lazaris (Université Pierre et Marie Curie / CNRS)

Title: La circulation de l’illustration scientifique au Moyen-Âge et à l’époque moderne

Abstract: Nous vivons entourés d’images. Elles nous portent, nous charment ou nous déçoivent et cela était également le cas, à des degrés différents bien entendu, pour l’homme durant le Moyen Age et l’époque moderne. Comment les images ont-elles façonné sa pensée dans le domaine des sciences et dans quelles mesures en sont-elles représentatives ? Quelle était la nature des illustrations scientifiques et comment les acteurs de ces époques les ont-t-il mises au point et utilisées ? Les périodes médiévale et moderne sont particulièrement propices pour mener une recherche sur la constitution d’une pensée visuelle liée aux savoirs scientifiques. Cet exposé sera l'occasion de présenter un projet de recherche en Humanités numériques visant à contribuer à cette problématique en examinant de quelle façon les développements actuels dans les domaines de l’IA et de la vision artificielle permettent d’envisager des approches nouvelles pour l’analyse historique de la circulation de l’illustration scientifique au cours de ces deux périodes. Nous présenterons à cette occasion les corpus sélectionnés pour cette étude (les manuscrits contenant le Physiologus et le De Materia medica de Dioscoride pour le Moyen Age ; les planches d’histoire naturelle et sciences mathématiques dans le corpus des dictionnaires et encyclopédies au XVIIIe siècle) et montrerons, sur des exemples, comment les modes de circulation qui sont à l’œuvre dans ces corpus appellent notamment le développement de nouvelles techniques, basées sur la reconnaissance des formes et la mise en relation entre textes et images.

January 6, 2020, Véronique Burnod (Conservateur en chef des musées de France)

Title: Comment les historiens d'art peuvent-ils contribuer au deep learning?

Abstract: Certaines oeuvres d'art doivent être identifiées en l'absence d'archives, voire même au travers de repeints, ce qui complique la donne. L'étude des 'points informatifs' nous renseigne sur l'artiste et sur notre compréhension de ce dernier. Elle nous apporte un éclairage inédit lorsque l'oeuvre n'est pas lisible (mauvaises restaurations, par exemple). Cette nouvelle méthode d'analyse donne d'excellents résultats comme le démontreront les découvertes d'une étude de Michel Ange pour le Jugement dernier de la Sixtine (acquise ensuite par le Louvre) et de 'la Dormeuse de Naples' d'Ingres, une oeuvre mythique disparue parmi les plus recherchées au monde. Cette expertise autorise désormais les historiens d'art qui sont formés à cette discipline à structurer les banques d'images des musées de France. Des travaux d'étudiants réalisés à Lille 3 en donnent la preuve. Désormais l'objectif est de rôder les systèmes en IA sur les banques d'images des Musées de France en lien avec le service de Jean Ponce. Mais comment avancer tant que cette discipline scientifique ne sera pas reconnue chez les Historiens d'Art aux plans national et international ? Actuellement cela paralyse les travaux possibles en lien avec l'IA, l'envergure et l'incidence du projet nécessitant une véritable expertise dans ce domaine.

December 2, 2019, Mathieu Aubry (ENPC)

Title: Machine learning and text analysis for digital humanities

Abstract: I will present key concepts and challenges of Deep Learning approaches and in particular their applications on images for digital humanities. The presentation will use three concrete examples to introduce these concepts and challenges: artwork price prediction, historical watermark recognition, and pattern recognition and discovery in artwork datasets.

November 5, 2019, Thierry Poibeau, Mathilde Roussel and Matthieu Raffard, Tim Van De Cruys (Lattice (CNRS)/ IRIT (Toulouse))

[video]

[Slides]

Title: Oupoco, l’ouvroir de poésie potentielle (Thierry Poibeau)

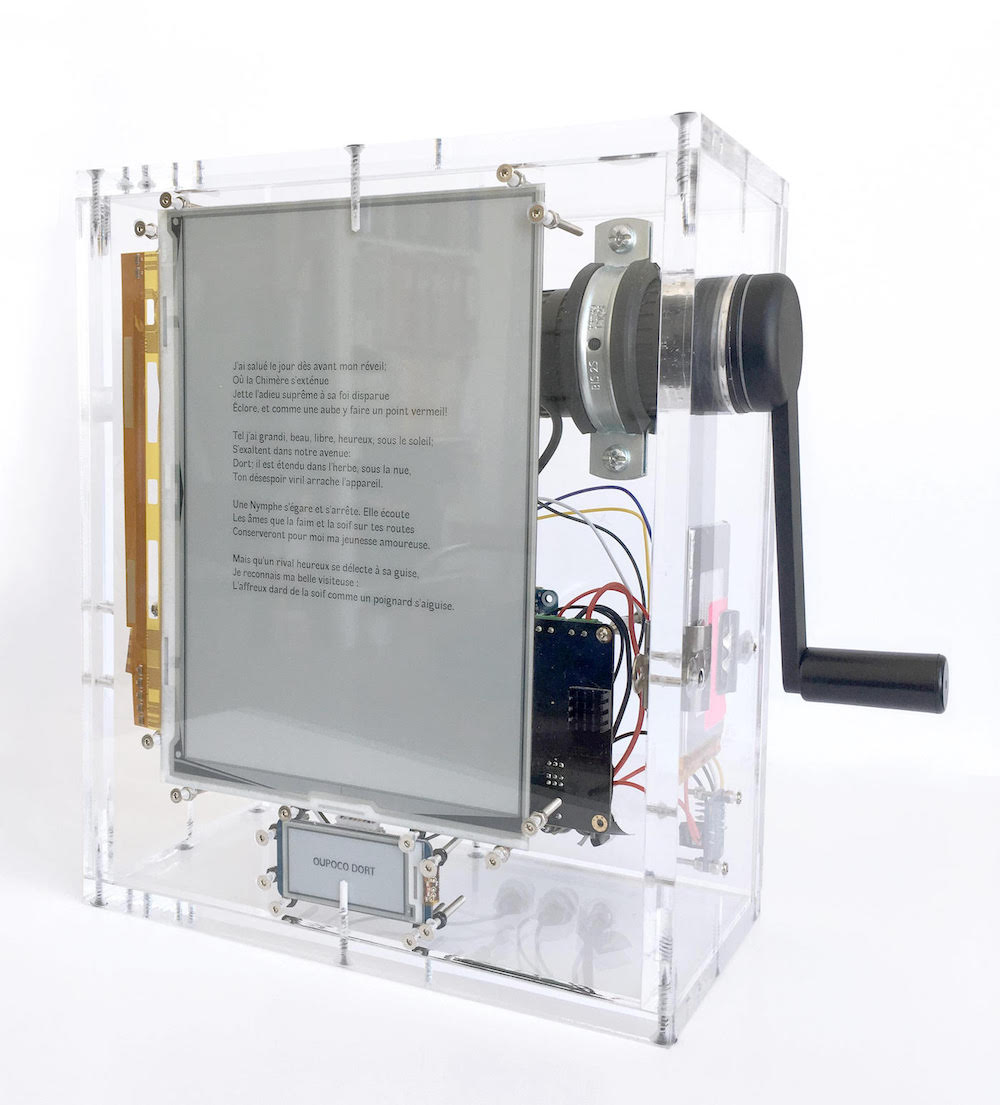

Abstract: Oupoco, l’ouvroir de poésie potentielle. Thierry Poibeau, Lattice (Paris). La présentation portera sur le projet Oupoco, qui est largement inspiré de l’ouvrage de Raymond Queneau « Cent mille milliards de poèmes », paru en 1961. Dans cet ouvrage, Queneau propose 10 sonnets dont tous les vers riment, ce qui permet de les combiner librement pour composer des poèmes respectant la forme du sonnet. Dans le cadre d’Oupoco, les poèmes de Queneau ont été remplacés par des sonnets du 19e siècle, qui sont à la fois libres de droit et plus variés quant à leur forme et leur structure. Un module d’analyse (structure globale, type de rimes, etc.) a été mis en place et les informations ainsi obtenues servent de base au générateur produisant des sonnets respectant les règles propres à ce genre. Au-delà de l’aspect ludique du projet, celui-ci pose des questions quant au statut de l’auteur, et quant à la cohérence et la pertinence des poèmes produits. Il suscite aussi la curiosité, et amène par exemple souvent le lecteur à revenir aux sonnets source pour vérifier quel est le sens original d'un vers donné. Finalement certaines extensions récentes du projet seront présentés, comme la « boîte à poésie », une version portative du générateur Oupoco.

Title: Présentation de la Boîte à poésie (Mathilde Roussel and Matthieu Raffard)

Abstract: Boîte à poésie, un générateur de poésie portable et basse consommation, développé dans le cadre du projet Oupoco suite à une collaboration avec l’Atelier Raffard-Roussel.

Title: La génération automatique de poésie à l'aide de réseaux de neurones (Tim Van De Cruys)

Abstract: La génération automatique de poésie est une tâche ardue pour un système informatique. Pour qu'un poème ait du sens, il est important de prendre en compte à la fois des aspects linguistiques et littéraires. Les modèles de langue basés sur les réseaux de neurones ont amélioré l'état de l'art par rapport à la modélisation prédictive de langage, mais quand ils sont entraînés sur des corpus de texte généraux, ils ne génèrent évidemment pas de poésie en soi. Dans cette présentation, on explorera comment ces modèles - entraînés sur des textes généraux - peuvent être adaptés afin de modéliser les aspects linguistiques et littéraires nécessaires pour la génération de poésie. Le cadre présenté est appliqué à la génération de poèmes en français, et évalué à l'aide d'une évaluation humaine. Le projet Oupoco est soutenu par le labex Transfers et l’EUR Translitterae.

October 7, 2019, Léa Saint-Raymond / Béatrice Joyeux-Prunel for the DHAI organizing members (ENS)

[Slides]

Title: When Digital Humanities meet Artificial Intelligence, an Introduction

Abstract: Introductory and methodological session on the themes of the seminars

September 17, 2019, Alexei Efros (UC Berkeley)

Title: Finding Visual Patterns in Large Photo Collections for Visualization, Analytics, and Artistic Expression

Abstract: Our world is drowning in a data deluge, and much of this data is visual. Humanity has captured over one trillion photographs last year alone. 500 hours of video is being uploaded to YouTube every minute. In fact, there is so much visual data out there already that much of it might never be seen by a human being! But unlike other types of 'Big Data', such as text, much of the visual content cannot be easily indexed or searched, making it Internet’s 'digital dark matter' [Perona 2010]. In this talk, I will first discuss some of the unique challenges that make visual data difficult compared to other types of content. I will them present some of our work on navigating, visualizing, and mining for visual patterns in large-scale image collections. Example data sources will include user-contributed Flickr photographs, Google StreetView imagery of entire cities, a hundred years of high school student portraits, and a collection of paintings attributed to Jan Brueghel. I will also show how recent progress in using deep learning as a way to find visual patterns and correlations could be used to synthesize novel visual content using 'image-to-image translation' paradigm. I will conclude with examples of contemporary artists using our work as a new tool for artistic visual expression.

October 22, 2019, Emily L. Spratt (Columbia)

Title: Art, Ethics, and AI: Problems in the Hermeneutics of the Digital Image

Abstract: In the last five years, the nature of historical inquiry has undergone a radical transformation as the use of AI-enhanced search engines has become the predominant mode of knowledge investigation, consequentially affecting our engagement with images. In this system, the discovery of responses to our every question is facilitated as the vast stores of digital information that we have come to call the data universe are conjured to deliver answers that are commensurate with our human scale of comprehension, yet often exceed it. In this digital interaction it is often assumed that queries are met with complete and reliable answers, and that data is synonymous with empirical validity, despite the frequently changing structure of this mostly unsupervised repository of digital information, which in actuality projects a distortion of the physical world it represents. In this presentation, the role of vision technology and AI in navigating, analyzing, organizing, and constructing our art and art historical archives of images will be examined as a shaping force on our interpretation of the past and projection of the future. Drawing upon the observations made by Michel Foucault in The Archaeology of Knowledge that the trends toward continuity and discontinuity in descriptions of historical narratives and philosophy, respectively, are reflections of larger hermeneutic structures that in and of themselves influence knowledge formation, the question of the role of image-related data science in our humanistic interpretation of the world will be explored. Through the examples of preservationists and artists using machine learning techniques to curate and create visual information, and in consideration of the information management needs of cultural institutions, the machine-learned image will be posited as a new and radical phenomenon of our society that is altering the nature of historical interpretation itself. By extension, this presentation brings renewed attention to aesthetic theory and calls for a new philosophical paradigm of visual perception to be employed for the analysis and management of our visual culture and heritage in the age of AI, one which incorporates and actively partakes in the development of computer vision-based technologies.

October 22, 2019, Emily L. Spratt (Columbia)

Title: Exhibition Film Screening of " Au-delà du Terroir, Beyond AI Art," and Discussion with Curator Emily L. Spratt